NVIDIA has poured an eye-watering €9.36 billion into developing the Blackwell GPU, yet Jim Keller believes a modest €1.17 billion would have been ample. His critique comes as NVIDIA prices the Blackwell GPU, built for top-tier AI workloads, at between €35,100 and €46,800 per unit, making it one of the most advanced and most expensive GPUs on the market.

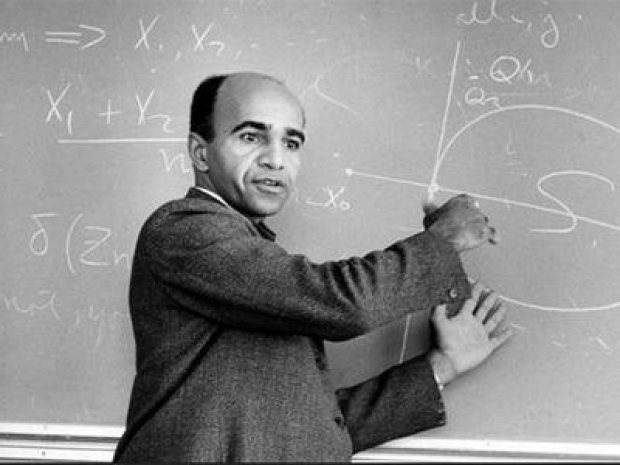

Named after the mathematician and statistician David Harold Blackwell, NVIDIA's Blackwell GPU marks a major advance in processing capability and efficiency. Built on TSMC's 4NP process, this AI GPU combines two dies in a single package, with 208 billion transistors in total. NVIDIA rates it at up to 20 petaflops of AI performance (at FP4 precision) per unit, roughly five times that of its predecessor, the H100.

Despite these breakthroughs, Keller's critique homes in on the economic efficiency of NVIDIA's investment. He suggests that the company could have cut its R&D spending significantly without diminishing the technological capabilities of the Blackwell series.

A proponent of open standards, Keller has argued that NVIDIA should have used Ethernet for chip-to-chip connectivity in its Blackwell-based GB200 GPUs for AI and HPC. In his view, this would have cut costs for NVIDIA and its hardware customers and made it easier to port software between hardware platforms, a flexibility NVIDIA may not be keen to encourage.

NVIDIA, however, maintains that the investment is crucial to preserving its leadership in the competitive AI and gaming markets, where it continues to vie with rivals such as AMD.

The Blackwell GPU is a titan in both performance and strategic market positioning. NVIDIA has shifted from selling individual GPUs to integrating them into larger systems such as DGX B200 servers and even larger configurations known as SuperPODs, built for compute-intensive tasks like training large-scale AI models.

This shift underscores NVIDIA's commitment to dominating the AI hardware sector. The company aims to earn significant returns on its investment through high-value, integrated solutions rather than component sales alone. This approach is reflected both in its pricing and in the integration of its GPUs into complete computing systems for workloads that demand immense computational resources.

Keller's assessment of NVIDIA's financial strategy in creating the Blackwell GPU sparks a broader conversation about cost-efficiency and innovation in the high-stakes tech industry, where balancing R&D investment and fiscal caution remains a pivotal challenge for leading companies.

Ethernet, a widely adopted technology at both the hardware and software levels, competes with NVIDIA's low-latency, high-bandwidth (up to 200 Gb/s) InfiniBand interconnect for data centres. In terms of raw performance, Ethernet, particularly at 400 GbE and the newer 800 GbE speeds, can hold its own against InfiniBand.

However, InfiniBand still offers certain features relevant to AI and HPC, as well as superior tail latencies, leading some to argue that Ethernet does not quite meet the needs of emerging AI and HPC workloads. Meanwhile, an industry group led by AMD, Broadcom, Intel, Meta, Microsoft, and Oracle is developing Ultra Ethernet, an interconnect technology expected to offer higher throughput and features tailored to AI and HPC communications. If it delivers on those goals, Ultra Ethernet could become a formidable rival to NVIDIA's InfiniBand for these workloads.

To be fair, Keller is the CEO of Tenstorrent, an AI chip company that competes against NVIDIA, so he may have a motive for saying what he does.